Human Activity Detection

U-ActionNet is a unified, multi-stage neural network framework for human action recognition in aerial (UAV) videos. It blends spatial and temporal streams to capture long-term motion, appearance, and behavior patterns in scenes with complex human-object and human-human interactions.

🧠 Motivation

Aerial action recognition is particularly challenging due to:

- Complex object manipulation under occlusion and motion

- Diverse temporal lengths and scene compositions

- Multiple actors in the frame performing non-trivial actions

Previous methods either:

- Focused on short-term features (e.g., optical flow, CNNs)

- Lacked unified temporal modeling across long horizons

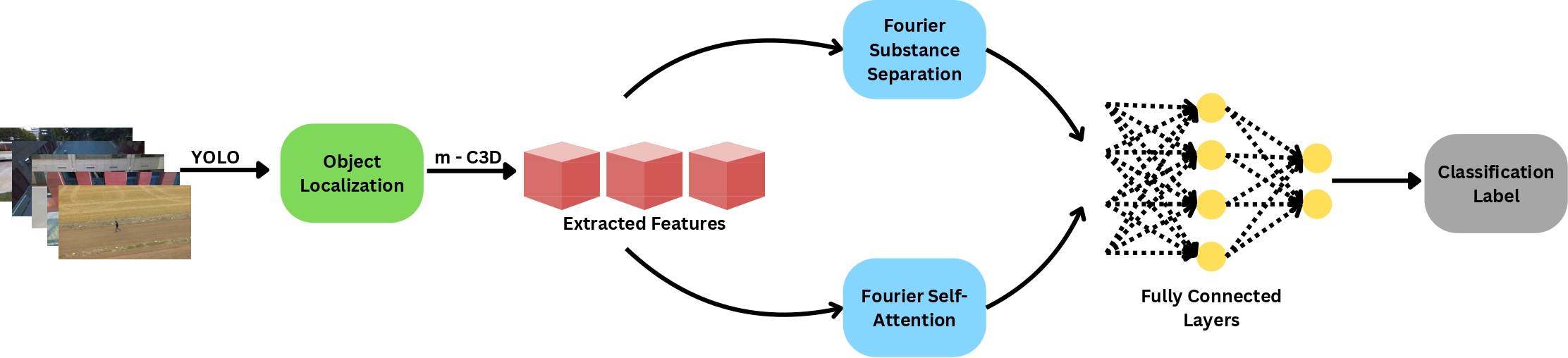

Flow of a Human Action Recognition (HAR) framework that employs Unmanned Aerial Vehicle (UAV) technology in surveillance systems

U-ActionNet addresses this gap by introducing:

- Multi-stage feature extraction, with region-of-interest (ROI) extraction using YOLO

- Fusion of spectral and temporal features to understand motion patterns

- Modular design to handle both short-term and long-term dependencies

- A lightweight, low-compute version compatible with edge devices
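The lightweight edge variant is described as key-frame based, but the frame-selection rule is not given here. As a purely hypothetical illustration, the sketch below keeps the `k` frames that change most relative to their predecessor (mean absolute pixel difference). The function name `select_key_frames` and the scoring heuristic are assumptions for illustration, not the U-ActionNet method.

```python
import numpy as np


def select_key_frames(clip: np.ndarray, k: int) -> np.ndarray:
    """Return sorted indices of k key frames from a clip of shape (T, H, W[, C]).

    Hypothetical heuristic: score each frame by its mean absolute difference
    from the previous frame and keep the k highest-scoring frames.
    """
    num_frames = clip.shape[0]
    if not 1 <= k <= num_frames:
        raise ValueError("k must be between 1 and the number of frames")
    frames = clip.astype(np.float32)
    # Mean absolute change between consecutive frames (one score for frames 1..T-1).
    reduce_axes = tuple(range(1, frames.ndim))
    change = np.abs(frames[1:] - frames[:-1]).mean(axis=reduce_axes)
    # Frame 0 has no predecessor; give it +inf so it is always kept as an anchor.
    scores = np.concatenate([[np.inf], change])
    # Highest scores first; a stable sort keeps earlier frames on ties.
    top = np.argsort(-scores, kind="stable")[:k]
    # Return indices in temporal order so the reduced clip stays chronological.
    return np.sort(top)
```

Sorting the selected indices keeps the reduced clip in temporal order, which a downstream temporal model would presumably expect.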

The U-ActionNet architecture incorporates an Object Localization Block (green), an m-C3D Block for feature extraction (red), a Fourier Substance Separation Block (blue), a Fourier Self-Attention Block (blue), and two Dense Layers (yellow).
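The Object Localization Block performs region-of-interest extraction with YOLO. A minimal sketch of the cropping step is shown below, assuming the YOLO detections for a clip are already available as pixel-space `(x1, y1, x2, y2)` boxes. The function name `crop_clip_to_roi`, the union-box strategy, and the `margin` parameter are illustrative assumptions, not the project's actual code.

```python
import numpy as np


def crop_clip_to_roi(clip: np.ndarray, boxes, margin: float = 0.1) -> np.ndarray:
    """Crop a clip of shape (T, H, W, C) to the union of detected boxes.

    boxes: (N, 4) pixel boxes in (x1, y1, x2, y2) format, e.g. detections a
    YOLO model produced over the clip (hypothetical input format).
    margin: fractional padding added around the union box on each side.
    """
    height, width = clip.shape[1:3]
    boxes = np.asarray(boxes, dtype=np.float64).reshape(-1, 4)
    if boxes.shape[0] == 0:
        return clip  # no detections: fall back to the full frame
    # One union box for the whole clip keeps every cropped frame aligned.
    x1, y1 = boxes[:, 0].min(), boxes[:, 1].min()
    x2, y2 = boxes[:, 2].max(), boxes[:, 3].max()
    pad_x, pad_y = margin * (x2 - x1), margin * (y2 - y1)
    # Pad, then clamp to the frame bounds.
    left = max(0, int(np.floor(x1 - pad_x)))
    top = max(0, int(np.floor(y1 - pad_y)))
    right = min(width, int(np.ceil(x2 + pad_x)))
    bottom = min(height, int(np.ceil(y2 + pad_y)))
    return clip[:, top:bottom, left:right]
```

Using a single box per clip, rather than one per frame, is one way to give a 3D convolutional feature extractor such as C3D spatially consistent input across time; the cropped clip would typically be resized to a fixed resolution afterwards.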

⚙️ Key Features

- Multi-Stage Transformer Backbone:

  - Region-of-interest extraction using YOLO

  - Feature extraction using a modified C3D (m-C3D) module

- Fourier-Based Action Recognition:

  - The Fourier Substance Separation module isolates dynamic, action-rich regions from static regions

  - Fourier Self-Attention captures and memorises context from the aerial videos

- Compatibility:

  - Server: a heavy-duty model for server-side deployment

  - Edge devices: a lightweight, key-frame-based model
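The Fourier Substance Separation module is described above only at a high level. One simple way to illustrate the idea of separating static from dynamic content is a per-pixel Fourier transform along the time axis: the lowest temporal frequencies (bin 0 is the temporal mean) model the static scene, and the remaining frequencies model motion. The sketch below assumes this interpretation; the function name `fourier_substance_separation` and the `static_bins` parameter are hypothetical, and the published module may be learned and differ substantially.

```python
import numpy as np


def fourier_substance_separation(clip: np.ndarray, static_bins: int = 1):
    """Split a clip of shape (T, ...) into static and dynamic parts along time.

    Hypothetical sketch: the lowest `static_bins` temporal frequencies are
    treated as static content; everything else is treated as dynamic.
    """
    num_frames = clip.shape[0]
    signal = np.asarray(clip, dtype=np.float64)
    # Real FFT along the time axis, independently for every pixel/channel.
    spectrum = np.fft.rfft(signal, axis=0)
    # Keep only the low-frequency bins; bin 0 is the per-pixel temporal mean.
    static_spectrum = np.zeros_like(spectrum)
    static_spectrum[:static_bins] = spectrum[:static_bins]
    static = np.fft.irfft(static_spectrum, n=num_frames, axis=0)
    # By linearity, the residual equals the inverse FFT of the remaining bins.
    dynamic = signal - static
    return static, dynamic


# Toy clip: a constant background with one pixel that flickers every frame.
clip = np.full((8, 4, 4), 5.0)
clip[::2, 1, 2] = 0.0
clip[1::2, 1, 2] = 10.0
static, dynamic = fourier_substance_separation(clip)
# Per-pixel motion energy: about 5 at the flickering pixel (1, 2), about 0 elsewhere.
motion_map = np.abs(dynamic).mean(axis=0)
```

A map like `motion_map` highlights where action-rich content lives, which is the kind of signal a later attention stage could weight more heavily.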

📝 Citation

@article{chowdhury2024u,
  title={U-ActionNet: Dual-pathway fourier networks with region-of-interest module for efficient action recognition in UAV surveillance},
  author={Chowdhury, Abdul Monaf and Imran, Ahsan and Hasan, Md Mehedi and Ahmed, Riad and Azad, Akm and Alyami, Salem A},
  journal={IEEE Access},
  year={2024},
  publisher={IEEE}
}