Open World Amodal Counting

I am working on the CAPTURe benchmark, which evaluates the ability of vision-language models (VLMs) to perform amodal counting—inferring the number of occluded objects based on visible patterns. This task reflects critical spatial reasoning skills that current VLMs largely lack.

My goal is to develop a system that improves counting accuracy under occlusion by enhancing pattern recognition, integrating visual and textual cues, and exploring hybrid approaches such as inpainting pipelines, coordinate extraction, or reasoning modules.
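To make the coordinate-extraction direction concrete, here is a minimal toy sketch in Python. It is not the project's method. It assumes that some upstream detector (not shown) already returns the centre coordinates of the visible objects, that the objects sit on an axis-aligned regular grid whose outer rows and columns are at least partly visible, and that the occluder is a known rectangle. The names `estimate_spacing` and `amodal_count` are hypothetical and introduced only for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def estimate_spacing(values: List[float], tol: float = 1e-6) -> float:
    """Return the smallest gap between distinct coordinates.

    Assumption: the smallest observed gap equals the grid spacing.
    """
    uniq = sorted(set(round(v, 6) for v in values))
    gaps = [b - a for a, b in zip(uniq, uniq[1:]) if b - a > tol]
    # A single row or column has no gaps; its spacing is irrelevant.
    return min(gaps) if gaps else 1.0


def amodal_count(visible: List[Point], occluder: Box) -> int:
    """Count visible objects plus empty grid cells hidden by the occluder."""
    if not visible:
        raise ValueError("at least one visible object is required")
    xs = [p[0] for p in visible]
    ys = [p[1] for p in visible]
    dx, dy = estimate_spacing(xs), estimate_spacing(ys)
    x0, y0 = min(xs), min(ys)
    cols = round((max(xs) - x0) / dx) + 1
    rows = round((max(ys) - y0) / dy) + 1

    # Grid cells that already contain a visible object.
    seen = {(round((x - x0) / dx), round((y - y0) / dy)) for x, y in visible}

    x_min, y_min, x_max, y_max = occluder
    hidden = 0
    for i in range(cols):
        for j in range(rows):
            if (i, j) in seen:
                continue
            x, y = x0 + i * dx, y0 + j * dy
            # Only count empty cells that fall under the occluder.
            if x_min <= x <= x_max and y_min <= y <= y_max:
                hidden += 1
    return len(visible) + hidden


# Toy scene: a 4 x 3 grid with spacing 10, where a box hides four objects.
full_grid = [(10.0 * i, 10.0 * j) for i in range(4) for j in range(3)]
box = (15.0, 5.0, 35.0, 25.0)
visible_points = [
    p for p in full_grid
    if not (box[0] <= p[0] <= box[2] and box[1] <= p[1] <= box[3])
]
total = amodal_count(visible_points, box)  # 8 visible + 4 inferred = 12
```

Real scenes rarely satisfy these assumptions (patterns can be rotated, irregular, or cut off at the image border), which is part of why the task is hard for current VLMs.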

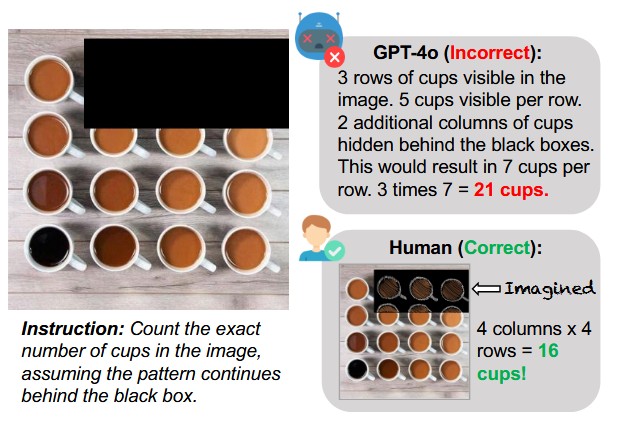

Example of an occluded image with an output from GPT-4o. People can easily infer the number of missing cups by reasoning over the occluded pattern, whereas models generally struggle with such occluded scenes.

---

This project is currently under review at CVPR 2026.

---

📝 Citation

    @article{arib2025counting,
      title={Counting Through Occlusion: Framework for Open World Amodal Counting},
      author={Arib, Safaeid Hossain and Akter, Rabeya and Chowdhury, Abdul Monaf and Sourov, Md Jubair Ahmed and Hasan, Md Mehedi},
      journal={arXiv preprint arXiv:2511.12702},
      year={2025}
    }