Language Guided Embodied Agents

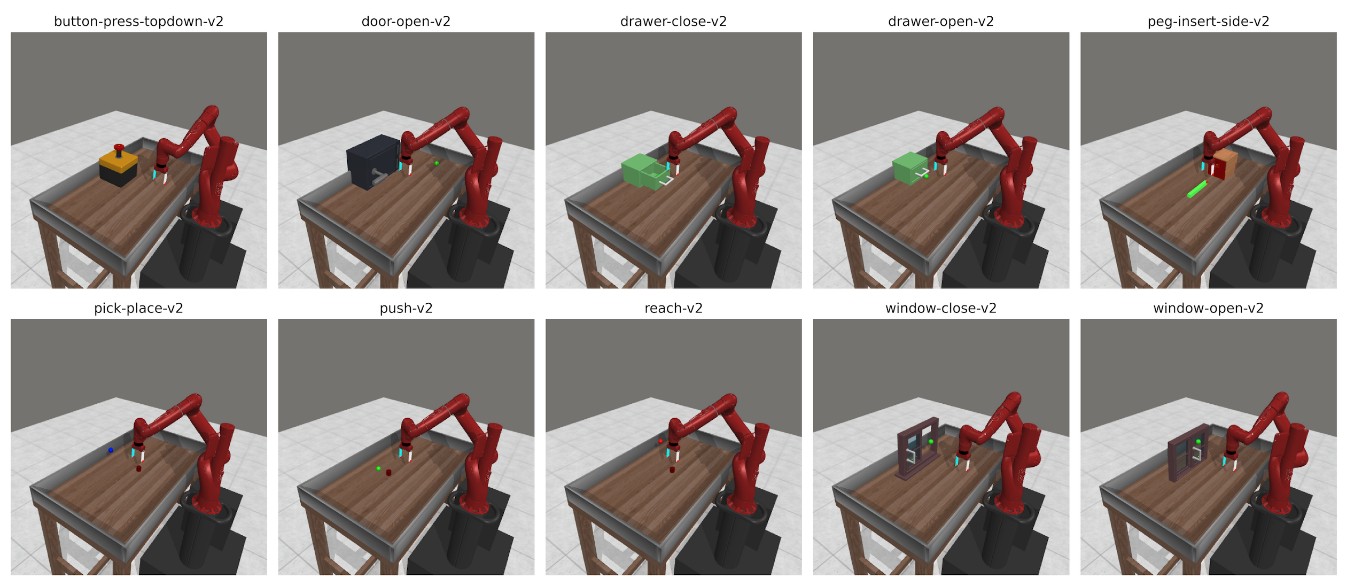

Context. Vision-language models (VLMs) have been used as reward models for embodied agents for quite some time. Visual cues such as images, together with textual instructions, have been used to guide agents through rudimentary tasks. In some cases [furl], the cosine similarity between visual cues and textual instructions has been used to generate VLM-based rewards that align the agent with the goal task.
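A minimal sketch of such a cosine-similarity reward, assuming the observation image and the instruction have already been encoded into a shared embedding space by a CLIP-style encoder. The encoder is not shown, the vectors are toy placeholders rather than real embeddings, and the optional `threshold` binarisation is a hypothetical option, not necessarily the exact formulation used in [furl]:

```python
import math
from typing import Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def vlm_similarity_reward(image_emb: Sequence[float],
                          text_emb: Sequence[float],
                          threshold: Optional[float] = None) -> float:
    """Reward = cosine similarity between observation and instruction embeddings.

    If `threshold` is given (hypothetical option), the score is binarised,
    one simple way to suppress noise from an imperfect VLM.
    """
    sim = cosine_similarity(image_emb, text_emb)
    if threshold is None:
        return sim
    return 1.0 if sim >= threshold else 0.0


# Toy vectors standing in for CLIP image/text features.
obs_emb = [0.9, 0.1, 0.0]
instr_emb = [1.0, 0.0, 0.0]
print(round(vlm_similarity_reward(obs_emb, instr_emb), 3))  # prints 0.994
```

A continuous score gives a dense signal at every step; thresholding trades that density for robustness against small, spurious similarity changes.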

However, in long-horizon, sparse-reward tasks, aligning visual and textual cues is not enough. When the only reward arrives at the end of the episode, the agent fails at long, complex tasks because it receives almost no signal about which intermediate actions helped or hurt. The question then becomes:
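A small numerical illustration of the sparse-reward problem. With discount factor γ, a reward delivered only at the final step reaches the first step as roughly γ^T, and in a failed episode every return is exactly zero, so the agent learns nothing about which steps went wrong. The episode length, γ and the per-step dense reward below are illustrative values, not settings from this project:

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Return G_t = sum_k gamma^k * r_{t+k} for every step t of one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each return reuses the one computed after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


T = 500        # illustrative episode length
gamma = 0.99   # illustrative discount factor

sparse_success = [0.0] * (T - 1) + [1.0]  # reward only on the last step
sparse_failure = [0.0] * T                # failed episode: no reward at all
dense = [0.01] * T                        # small shaped reward every step

g_success = discounted_returns(sparse_success, gamma)
g_failure = discounted_returns(sparse_failure, gamma)
g_dense = discounted_returns(dense, gamma)

print(f"sparse success, return at t=0: {g_success[0]:.4f}")
print(f"sparse failure, max return:    {max(g_failure):.4f}")
print(f"dense shaping, return at t=0:  {g_dense[0]:.4f}")
```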

Can pretrained vision-language models provide reward signals and critique agent behavior in natural language?

To address this, I have been working on incorporating VLM-generated textual feedback about what happened during each episode. The feedback gives the agent historical context: it identifies the mistakes the agent made, explains how to correct them, and describes what the agent should do to complete the task.
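One way such feedback could be requested is to summarise the episode as text and ask the VLM three targeted questions. The sketch below only builds the prompt; the `StepRecord` structure, `build_reflection_prompt` and its `max_steps` parameter are hypothetical names introduced for illustration, and the actual call to the VLM (with key frames attached) is not shown:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StepRecord:
    """One step of an episode summary (hypothetical structure)."""
    step: int
    action_desc: str
    reward: float


def build_reflection_prompt(task: str, history: List[StepRecord],
                            success: bool, max_steps: int = 10) -> str:
    """Assemble a text prompt asking a VLM to critique an episode.

    Only the last `max_steps` records are kept to bound prompt length.
    Frames from the episode would be passed to the VLM alongside this text.
    """
    recent = history[-max_steps:]
    lines = [f"Task: {task}",
             f"Outcome: {'success' if success else 'failure'}",
             "Recent steps:"]
    for rec in recent:
        lines.append(f"  step {rec.step}: {rec.action_desc} (reward {rec.reward:.2f})")
    # The three questions mirror the feedback described above.
    lines += [
        "1. What mistake, if any, did the agent make?",
        "2. What should it do to correct that mistake?",
        "3. What should it do next to complete the task?",
    ]
    return "\n".join(lines)


history = [StepRecord(0, "moved gripper toward drawer", 0.05),
           StepRecord(1, "closed gripper on empty space", 0.00)]
print(build_reflection_prompt("open the drawer", history, success=False))
```

Truncating to the most recent steps keeps the prompt within a small VLM's context budget, at the cost of dropping early history.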

I have designed and integrated an “episodic reflection” module driven by the Qwen2.5-VL-3B VLM. It automatically generates rich natural-language self-assessments of each trial, highlighting successes and pinpointing the causes of failure, to give the agent human-like introspection. Episodic reflection helps align the reward with the goal task. For the reward function, I have crafted a semantically aware, dense, grounded reward model that fuses these verbal reflections with CLIP-style vision-language alignment computed from task descriptions and goal images.
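A minimal sketch of one way to fuse these signals, assuming all three targets (task description, goal image, reflection text) are embedded in the same CLIP-style space as the observation. The weighted average, the weights and the name `fused_reward` are hypothetical choices for illustration, not the formulation used in this project:

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity, returning 0.0 for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)


def fused_reward(obs_emb: Sequence[float],
                 task_text_emb: Sequence[float],
                 goal_img_emb: Sequence[float],
                 reflection_emb: Sequence[float],
                 w_task: float = 0.4,
                 w_goal: float = 0.4,
                 w_refl: float = 0.2) -> float:
    """Weighted average of three alignment scores (hypothetical weights).

    - task term: does the current frame match the task description?
    - goal term: does the current frame resemble the goal image?
    - reflection term: does the frame match the reflection's suggested next step?
    With non-negative weights the result stays in [-1, 1].
    """
    total_w = w_task + w_goal + w_refl
    score = (w_task * cosine(obs_emb, task_text_emb)
             + w_goal * cosine(obs_emb, goal_img_emb)
             + w_refl * cosine(obs_emb, reflection_emb))
    return score / total_w


# Toy embeddings; real ones would come from a CLIP-style encoder.
obs = [0.8, 0.6, 0.0]
task_text = [1.0, 0.0, 0.0]
goal_img = [0.7, 0.7, 0.1]
reflection = [0.0, 1.0, 0.0]
print(f"fused reward: {fused_reward(obs, task_text, goal_img, reflection):.3f}")
```

Because the reflection text changes after every episode, the reflection term lets the same frame earn a different reward depending on what the agent was told to fix, which a static task/goal similarity cannot do.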

The agent is trained with the Soft Actor-Critic (SAC) algorithm. In a typical setup the state is the visual observation alone; to enrich the agent's representation, the natural-language feedback is combined with the visual observations to form the state, guiding the agent toward the goal task.
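The sketch below shows the two pieces involved, under stated assumptions. The soft Bellman target is the standard SAC critic target (clipped double-Q minus the entropy term); the fusion of vision and language by plain concatenation, the helper names, and the values of `gamma` and `alpha` are assumptions for illustration, since the document does not specify how the feedback is encoded or fused:

```python
from typing import List, Sequence


def build_state(visual_feat: Sequence[float],
                feedback_emb: Sequence[float]) -> List[float]:
    """Concatenate visual features with a language-feedback embedding.

    The actor and both critics would take this joint vector as input.
    """
    return list(visual_feat) + list(feedback_emb)


def sac_target(reward: float, done: bool,
               q1_next: float, q2_next: float, log_prob_next: float,
               gamma: float = 0.99, alpha: float = 0.2) -> float:
    """Soft Bellman target used to train SAC's critics.

    y = r + gamma * (1 - done) * (min(Q1', Q2') - alpha * log pi(a'|s'))
    where a' is sampled from the current policy at the next state s',
    and Q1', Q2' come from the target critics.
    """
    soft_value = min(q1_next, q2_next) - alpha * log_prob_next
    return reward + gamma * (0.0 if done else 1.0) * soft_value


# Toy features: 3-d visual vector plus 2-d feedback embedding.
state = build_state([0.2, 0.5, 0.1], [0.9, -0.3])
y = sac_target(reward=0.3, done=False, q1_next=1.2, q2_next=1.0,
               log_prob_next=-0.7)
print(len(state), round(y, 4))
```

Concatenation is the simplest fusion; attention-based or FiLM-style conditioning are common alternatives when the text embedding is much larger than the visual features.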

---

The project is currently under review at ICLR 2026.

---

📝 Citation

    @article{chowdhury2025lagea,
      title={LAGEA: Language Guided Embodied Agents for Robotic Manipulation},
      author={Chowdhury, Abdul Monaf and Mazumder, Akm Moshiur Rahman and Akter, Rabeya and Arib, Safaeid Hossain},
      journal={arXiv preprint arXiv:2509.23155},
      year={2025}
    }